Neural Networks From Scratch Using Python


What Is a Neural Network?

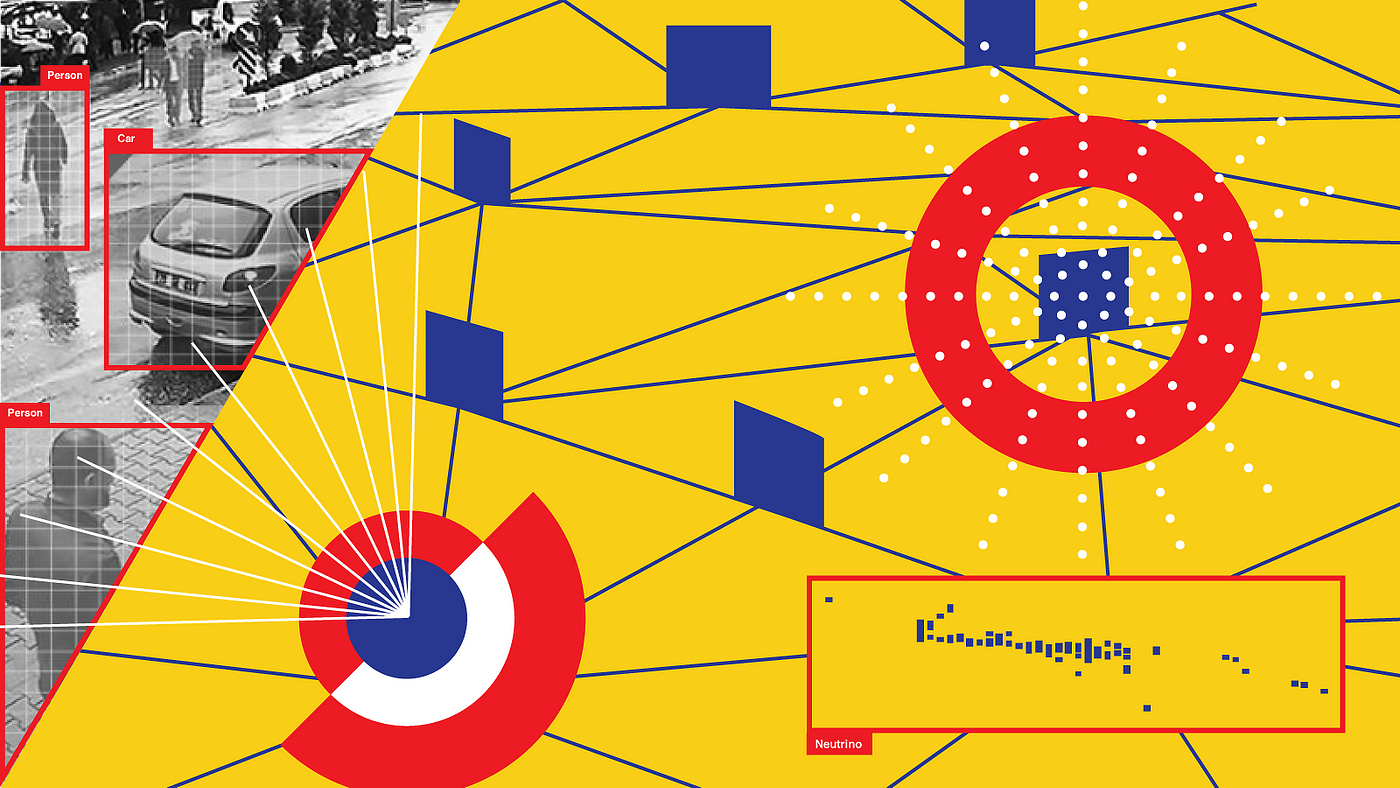

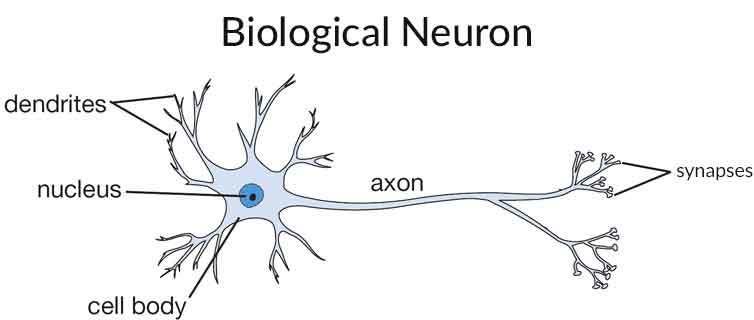

Neural networks are inspired by the biological neurons of the brain.

In a biological neuron, the dendrites carry inputs to the cell body, the cell body processes them, and the axon passes the result on.

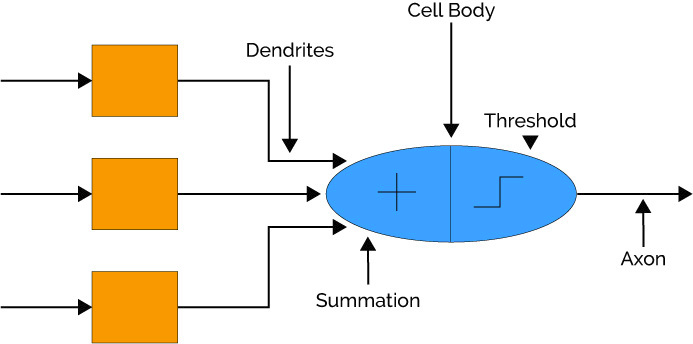

An artificial neuron follows the same process as a biological one:

- The inputs are passed in.

- The + symbol in the cell body denotes adding the inputs together.

- The threshold is the activation function (we will cover it later).
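A single artificial neuron can be sketched in a few lines of Python. This is a minimal illustration, assuming a step function as the threshold; the function name `neuron` and the AND-like weights and bias are hypothetical values chosen for the example, not taken from the figure.

```python
def neuron(inputs, weights, bias, threshold=0.0):
    """Fire (return 1) if the weighted sum plus bias exceeds the threshold."""
    # The "+" in the cell body: multiply each input by its weight and add them up
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The threshold plays the role of a (step) activation function
    return 1 if total > threshold else 0

# Hypothetical weights that make the neuron behave like a logical AND
print(neuron([1, 1], [0.6, 0.6], bias=-1.0))  # 1.2 - 1.0 > 0, so prints 1
print(neuron([1, 0], [0.6, 0.6], bias=-1.0))  # 0.6 - 1.0 < 0, so prints 0
```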

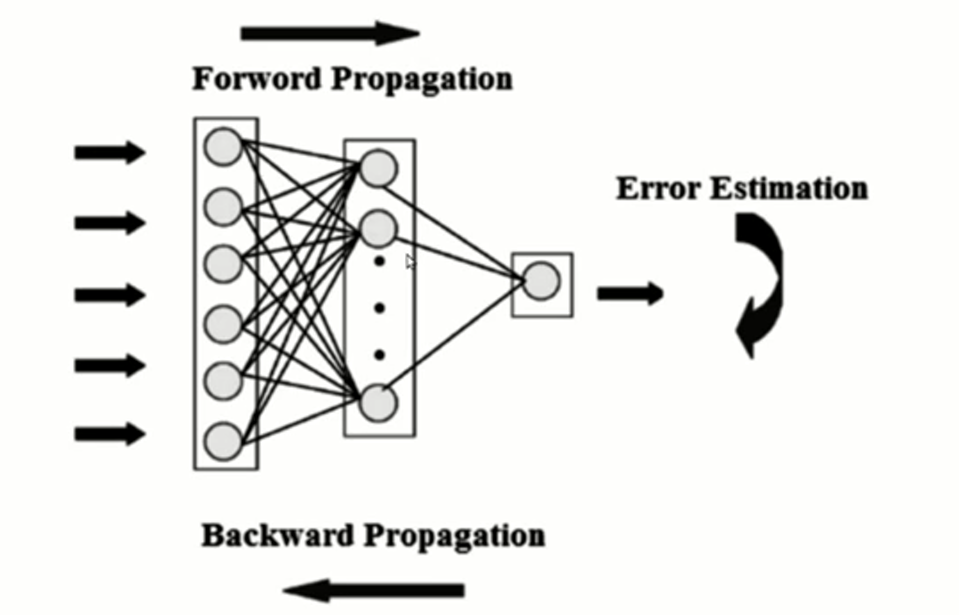

How Does a Neural Network Work?

Steps:

- Take the input values.

- Multiply them by the weights, add the bias, and pass the result through the activation function.

- This completes forward propagation.

- Check the error between the expected output and the predicted output.

- Change the weight values to reduce that error.

- This completes backpropagation.

- Repeat until the error is as low as possible.
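For a single layer with a sigmoid activation, these steps can be summarized in equations. This is a sketch assuming a squared-error loss; the learning rate η is our addition, and η = 1 corresponds to simply adding the adjustment to the weights. Here X holds the inputs (one row per example), w the weights, b the bias, σ the sigmoid, and ⊙ element-wise multiplication.

$$
\hat{y} = \sigma(Xw + b), \qquad e = y - \hat{y}
$$

$$
\Delta w = X^{\top}\big(e \odot \hat{y} \odot (1 - \hat{y})\big), \qquad w \leftarrow w + \eta\,\Delta w
$$

The factor ŷ ⊙ (1 - ŷ) is the sigmoid's derivative written in terms of its own output, so no extra evaluation of σ is needed.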

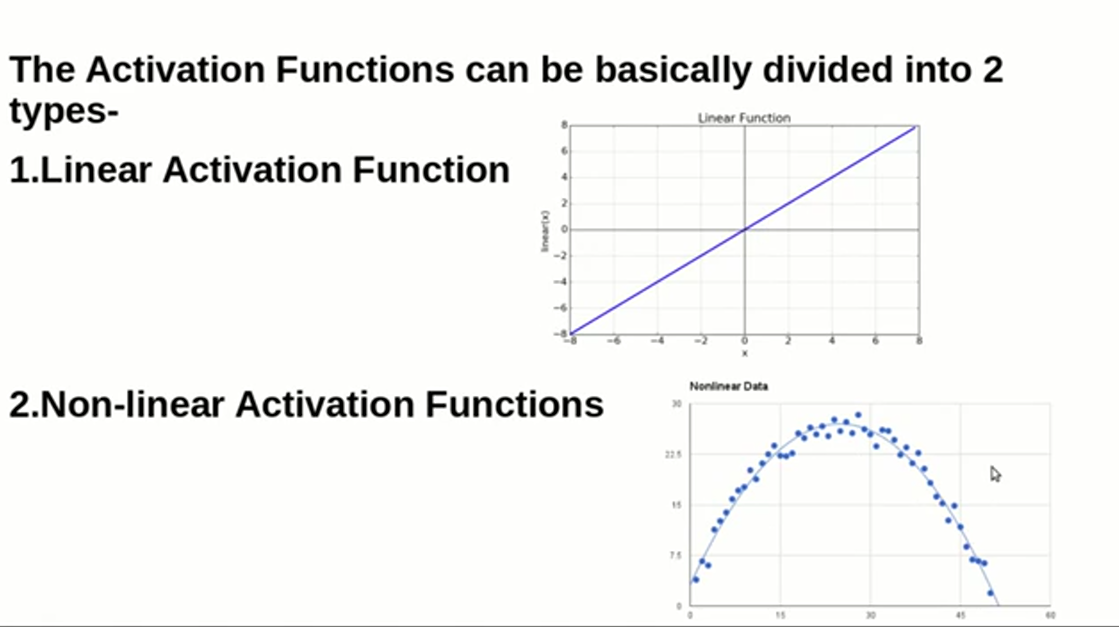

What Is an Activation Function?

Without an activation function, the network only computes a linear combination of its inputs, so it is equivalent to a linear regression model.

Non-linear activation functions are used far more often, because real-world datasets are usually non-linear and a purely linear model cannot capture that.

Activation functions are used in the hidden layers and the output layer.

Many non-linear activation functions are available, such as sigmoid, tanh, and ReLU.
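A quick numerical check makes this concrete: two stacked layers with no activation collapse into a single linear model. The sketch below uses NumPy with arbitrary random weights (the shapes and the seed are illustrative assumptions).

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 3))  # 5 samples, 3 features

# Two "layers" with weights and biases but no activation function in between
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
W2, b2 = rng.normal(size=(4, 1)), rng.normal(size=1)
two_layer = (x @ W1 + b1) @ W2 + b2

# The same mapping written as one linear model: y = x W + b
W = W1 @ W2
b = b1 @ W2 + b2
one_layer = x @ W + b

print(np.allclose(two_layer, one_layer))  # True
```

Because `W1 @ W2` and `b1 @ W2 + b2` are just another weight matrix and bias, adding more linear layers never adds expressive power; a non-linearity between the layers is what breaks this.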
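The three functions named here can be written with NumPy as follows. This is a minimal sketch for illustration; the function names are our own choice.

```python
import numpy as np

def sigmoid(x):
    # Squashes any real number into the range (0, 1)
    return 1 / (1 + np.exp(-x))

def tanh(x):
    # Squashes into the range (-1, 1) and is centred on zero
    return np.tanh(x)

def relu(x):
    # Keeps positive values and replaces negatives with zero
    return np.maximum(0, x)

z = np.array([-2.0, 0.0, 2.0])
print(sigmoid(z))  # approximately [0.119 0.5 0.881]
print(tanh(z))
print(relu(z))     # [0. 0. 2.]
```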


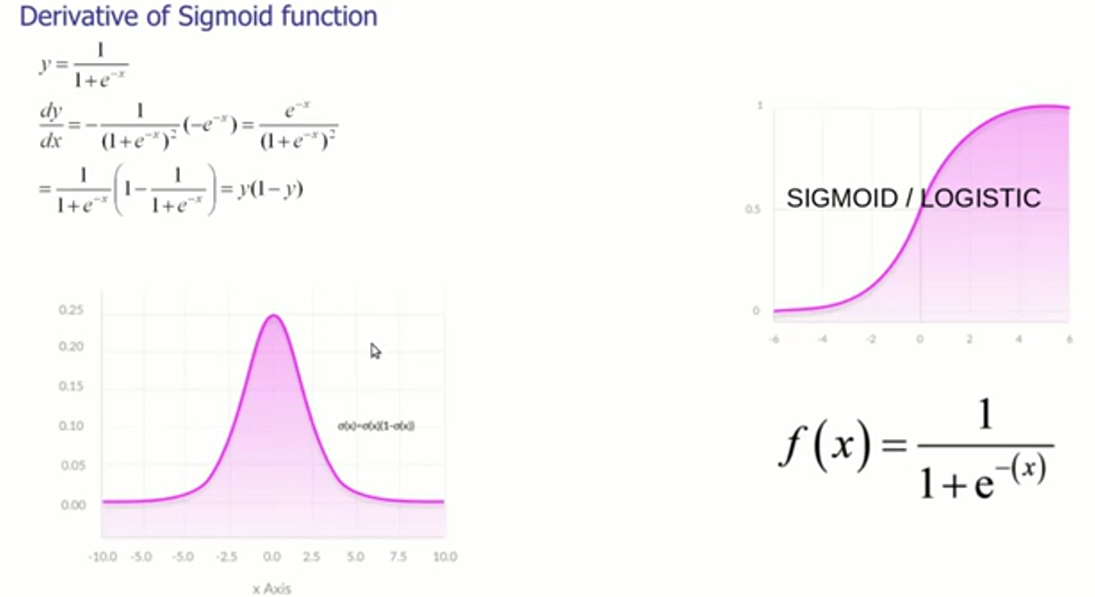

Each activation function has its own derivative.

The figure above shows the sigmoid derivative. Derivatives are what we use to update the weights.

If your problem is regression, you should not use sigmoid in the hidden and output layers, because it squashes every output into the range (0, 1); ReLU is a better choice there. For classification, sigmoid can be used.
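For the sigmoid, the derivative has the convenient form σ'(x) = σ(x)(1 - σ(x)), so it can be computed directly from the sigmoid's output. The sketch below checks that formula against a central finite difference; the helper name `sigmoid_derivative_from_output` and the step size `h` are hypothetical choices for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative_from_output(s):
    # If s = sigmoid(x), then d sigmoid / dx = s * (1 - s)
    return s * (1 - s)

x = np.linspace(-4, 4, 9)
analytic = sigmoid_derivative_from_output(sigmoid(x))

# Central finite difference as an independent check
h = 1e-5
numeric = (sigmoid(x + h) - sigmoid(x - h)) / (2 * h)

print(np.allclose(analytic, numeric))  # True
print(analytic.max())                  # 0.25, reached at x = 0
```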

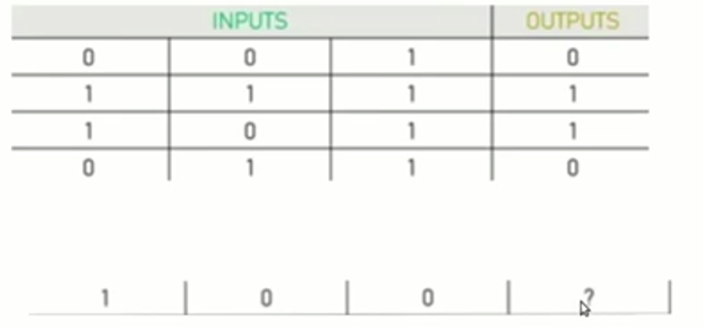

Here the inputs are 0s and 1s and the outputs are 0 or 1, so sigmoid, whose output lies between 0 and 1, is a good fit.

Now for a new situation: given the input 1 0 0, what will the output be?

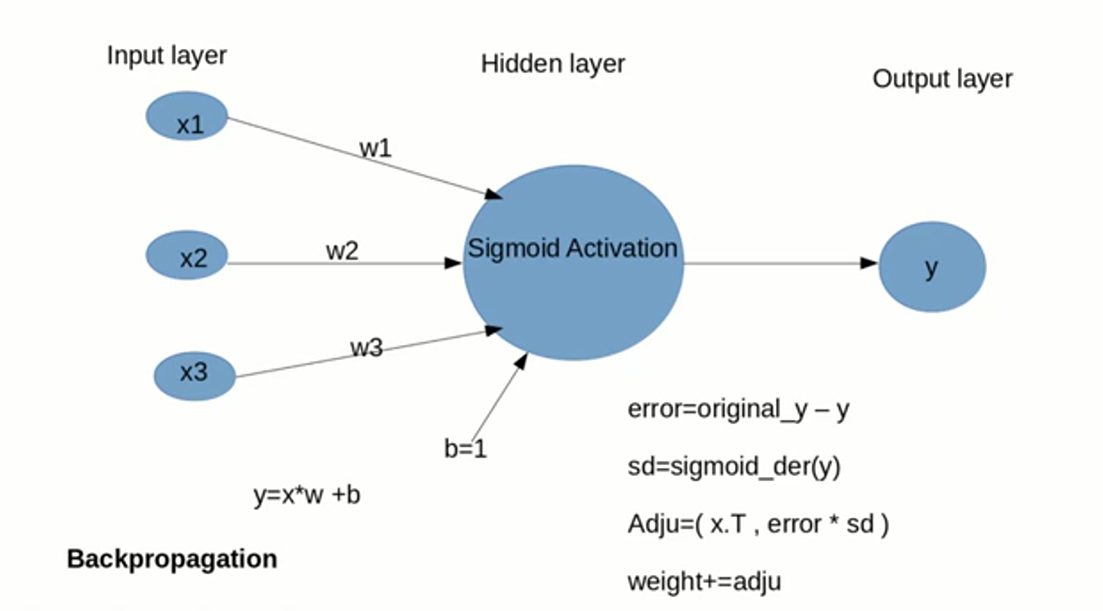

Forward Propagation

Forward propagation takes the inputs, multiplies them by the weights, adds the bias, and passes the result through the sigmoid function to get the calculated y. The calculated y is then subtracted from the original y to get the error:

error = original_y - calcul_y

The calculated y is passed to the sigmoid derivative, and the result is stored as sd. The error is multiplied element-wise by sd, the product is matrix-multiplied with the transposed training inputs, and the value is stored as Adju.
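One forward pass plus one weight adjustment can be sketched in vectorized NumPy as below. The training data is a made-up example (the output simply copies the first input) and is not necessarily the dataset in the figure; the random seed, the zero starting bias, and updating the bias alongside the weights are also our assumptions.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def sigmoid_derivative(s):
    # s is already a sigmoid output, so the slope is s * (1 - s)
    return s * (1 - s)

# Hypothetical 0/1 training data: 4 samples, 3 inputs each (illustrative only)
X = np.array([[0, 0, 1],
              [1, 1, 1],
              [1, 0, 1],
              [0, 1, 1]])
y = np.array([[0], [1], [1], [0]])

rng = np.random.default_rng(1)
weights = 2 * rng.random((3, 1)) - 1  # random start in [-1, 1)
bias = 0.0

# Forward propagation
calculated_y = sigmoid(X @ weights + bias)
error = y - calculated_y

# Adjustment: error scaled by the sigmoid's slope, then multiplied with the inputs
sd = sigmoid_derivative(calculated_y)
adju = X.T @ (error * sd)  # shape (3, 1), same as weights

weights += adju
bias += np.sum(error * sd)  # assumption: the bias is updated the same way
```

The transpose `X.T` is what turns the per-sample errors (shape 4 x 1) into one adjustment per weight (shape 3 x 1).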

Backward Propagation

The Adju values are then added to the weights, which updates them: weight += adju

Repeat this until the error is low.

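Once the network is trained, a new situation is fed in with `print(neural_network.think(np.array([A, B, C])))`, where A, B, and C are the three input values. The code is organized as follows: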

- `__init__` sets up the weights randomly.

- `sigmoid` and its derivative are defined.

- `train` carries out the backpropagation steps.

- `think` just passes the input values through the neural network.
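Putting the pieces together, a class with the four parts listed above might look like this. It is a sketch rather than the author's exact code: `sigmoid_derivative`, the `seed`, `iterations`, and `tolerance` parameters, the bias term, and the made-up training data are all assumptions. Training stops early if the mean absolute error drops below `tolerance`, which is one way to implement "repeat until the error is low".

```python
import numpy as np

class NeuralNetwork:
    def __init__(self, n_inputs=3, seed=1):
        # Start from random weights in [-1, 1) and a zero bias
        rng = np.random.default_rng(seed)
        self.weights = 2 * rng.random((n_inputs, 1)) - 1
        self.bias = 0.0

    def sigmoid(self, x):
        return 1 / (1 + np.exp(-x))

    def sigmoid_derivative(self, s):
        # s is a sigmoid output, so the slope is s * (1 - s)
        return s * (1 - s)

    def train(self, inputs, outputs, iterations=10_000, tolerance=1e-3):
        for _ in range(iterations):
            calculated = self.think(inputs)        # forward propagation
            error = outputs - calculated
            if np.mean(np.abs(error)) < tolerance:  # stop once the error is low
                break
            delta = error * self.sigmoid_derivative(calculated)
            self.weights += inputs.T @ delta        # weight += adju
            self.bias += np.sum(delta)              # assumption: bias is updated too

    def think(self, inputs):
        # Weighted sum plus bias, squashed by the sigmoid
        return self.sigmoid(inputs @ self.weights + self.bias)


# Hypothetical training data: the output copies the first input
training_inputs = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
training_outputs = np.array([[0], [1], [1], [0]])

neural_network = NeuralNetwork()
print("Random starting weights:", neural_network.weights.ravel())
neural_network.train(training_inputs, training_outputs)
print("Weights after training:", neural_network.weights.ravel())
print(neural_network.think(np.array([1, 0, 0])))
```

With this data the prediction for 1 0 0 should come out close to 1, since the output depends only on the first input; the exact value depends on the random starting weights.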

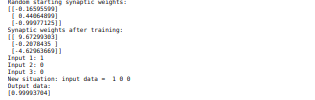

The weights start out random and end up error-corrected after training. We then give the network the input 1 0 0.

The output for it is 0.9993704, nearly 1 and almost right. This is how neural networks work.
